Introduction to the Full-Spherical Stereo technology

3-D panoramic sound can be transmitted in the stereo audio format

Since 26 February, 2024

Last update: 23 May, 2025

Introduction

In the conventional stereo sound system, only one-dimensional spatial information of sounds along the left-to-right axis can be transmitted. To transmit two-dimensional or three-dimensional spatial information, special audio formats such as 'Dolby Surround' and 'Ambisonics' are needed.

With the Full-Spherical Stereo technology, 3-dimensional spatial sound can be stored in and transmitted by the simple stereo audio format. Transmitting immersive spatial sound therefore requires no special data format at all. A 4-track recording system is required only in the recording process.

In principle, a 4-point measurement is required to obtain the spatial properties of waves in 3-dimensional space.

Here, the spatial properties of a wave are the propagation direction and propagation speed of the wave for each frequency bin at the observation point. For more details about the propagation direction and propagation speed, see the Technical note.
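
A short formulation may help show why four points are needed. The following is a simplified plane-wave sketch, offered as an assumption and not necessarily the formulation used in the Technical note: for one frequency bin, let the wave have unit propagation direction $\mathbf{n}$ and speed $c$, combined into the slowness vector $\mathbf{s} = \mathbf{n}/c$. For sensors at $\mathbf{r}_0, \dots, \mathbf{r}_3$, the arrival-time difference of sensor $i$ relative to sensor 0 is $\tau_i = \mathbf{s}\cdot(\mathbf{r}_i - \mathbf{r}_0)$, so the three measured differences fix the three components of $\mathbf{s}$:

```latex
\begin{pmatrix} \tau_1 \\ \tau_2 \\ \tau_3 \end{pmatrix}
=
\begin{pmatrix}
(\mathbf{r}_1 - \mathbf{r}_0)^{\top} \\
(\mathbf{r}_2 - \mathbf{r}_0)^{\top} \\
(\mathbf{r}_3 - \mathbf{r}_0)^{\top}
\end{pmatrix}
\mathbf{s},
\qquad
c = \frac{1}{\lVert \mathbf{s} \rVert},
\qquad
\mathbf{n} = \frac{\mathbf{s}}{\lVert \mathbf{s} \rVert}
```

The matrix is invertible only when the four sensors are not coplanar, which a tetrahedral arrangement guarantees. Three sensors would give only two delay equations, which cannot determine all three components of $\mathbf{s}$.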

A tetrahedral microphone system consisting of 4 omnidirectional microphones (Fig. 1) can be placed at the observation point to capture acoustic signals. The spatial properties of the sound at the observation point are then analyzed from the recorded 4-track signals.

Fig. 1 Tetrahedral microphone system

Four omnidirectional microphones (Audio-Technica AT9903) are placed at the vertices of a tetrahedron.

Each side of the tetrahedron is 15 mm long.
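
The 4-point idea can be tried numerically for the array in Fig. 1. This minimal Python sketch assumes an ideal plane wave, exactly known arrival-time differences, and a speed of sound of 343 m/s. It places four sensors at the vertices of a regular tetrahedron with a 15 mm edge, simulates the delays tau_i = s · (r_i - r_0) relative to sensor 0, and solves the resulting 3-by-3 linear system for the slowness vector s, whose direction and inverse length give the propagation direction and speed. The coordinates and all function names are illustrative, not part of the Full-Spherical Stereo software.

```python
import math


def tetrahedron_vertices(edge):
    """Vertices of a regular tetrahedron centred at the origin with the given edge length (m)."""
    # Alternate corners of the cube (+-1, +-1, +-1) form a regular tetrahedron of edge 2*sqrt(2).
    k = edge / (2.0 * math.sqrt(2.0))
    corners = [(1, 1, 1), (1, -1, -1), (-1, 1, -1), (-1, -1, 1)]
    return [tuple(k * c for c in v) for v in corners]


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(m, b):
    """Solve m x = b by Cramer's rule; m must be non-singular (sensors not coplanar)."""
    d = det3(m)
    if abs(d) < 1e-15:  # assumed tolerance in m^3: treat as coplanar
        raise ValueError("sensors are (nearly) coplanar: slowness is not determined")
    x = []
    for j in range(3):
        mj = [list(row) for row in m]
        for i in range(3):
            mj[i][j] = b[i]  # replace column j with the right-hand side
        x.append(det3(mj) / d)
    return tuple(x)


def estimate_slowness(positions, delays):
    """Slowness vector s from delays tau_i = t_i - t_0 (s) of sensors 1..3 relative to sensor 0."""
    baselines = [sub(p, positions[0]) for p in positions[1:]]
    return solve3(baselines, delays)


def direction_and_speed(s):
    """Split a slowness vector into a unit propagation direction and a speed (m/s)."""
    norm = math.sqrt(dot(s, s))
    return tuple(x / norm for x in s), 1.0 / norm


# Usage: simulate one plane wave and recover its direction and speed.
mics = tetrahedron_vertices(0.015)      # 15 mm edge, as in Fig. 1
c_true = 343.0                          # assumed speed of sound in air (m/s)
n_true = (0.6, 0.0, 0.8)                # unit propagation direction
s_true = tuple(x / c_true for x in n_true)
delays = [dot(s_true, sub(p, mics[0])) for p in mics[1:]]
n_est, c_est = direction_and_speed(estimate_slowness(mics, delays))
print(n_est, c_est)
```

Running it should recover the chosen direction and the 343 m/s speed up to floating-point rounding. Cramer's rule keeps the sketch dependency-free; a practical implementation would more likely use a linear-algebra library and a least-squares fit over noisy per-bin delays.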

Since the spatial properties of a wave are the direction and speed of propagation, the spatial information of sound at the observation point can be expressed by a 3-dimensional vector for each frequency bin, as shown in Fig. 2. No matter how many wave sources exist, there is only one propagation direction and one propagation speed at the observation point for each frequency bin. That is why the spatial properties of a wave can be represented by a single vector for each frequency bin.
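
This page does not describe how the per-bin vector is obtained from the 4 tracks. One common approach, assumed here purely for illustration, is to read the arrival-time difference between two microphones at each frequency bin from the phase of their cross-spectrum; three such differences relative to a reference microphone then determine one vector per bin. The sketch computes a single DFT bin directly to stay dependency-free (an FFT-based short-time transform would normally be used), and the function names are hypothetical.

```python
import cmath
import math


def dft_bin(x, k):
    """Single DFT coefficient X[k] = sum_n x[n] * exp(-2j*pi*k*n/N)."""
    N = len(x)
    return sum(v * cmath.exp(-2j * math.pi * k * n / N) for n, v in enumerate(x))


def bin_delay(x_ref, x_other, k, fs):
    """Delay (s) of x_other relative to x_ref, read from the phase at DFT bin k.

    If x_other(t) = x_ref(t - tau), the cross-spectrum X_other * conj(X_ref) at
    frequency f has phase -2*pi*f*tau. The estimate is unambiguous only while
    |tau| < 1 / (2 f), i.e. before the phase wraps around.
    """
    f = k * fs / len(x_ref)
    cross = dft_bin(x_other, k) * dft_bin(x_ref, k).conjugate()
    return -cmath.phase(cross) / (2.0 * math.pi * f)


# Usage: a 1 kHz tone that reaches the second microphone 20 microseconds later.
fs, N, k = 48000, 480, 10               # bin 10 of a 480-point DFT is 1 kHz at 48 kHz
tau = 20e-6
f = k * fs / N
x0 = [math.cos(2 * math.pi * f * n / fs) for n in range(N)]
x1 = [math.cos(2 * math.pi * f * (n / fs - tau)) for n in range(N)]
print(bin_delay(x0, x1, k, fs))
```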

If the spatial properties of the sound at the observation point can be accurately reproduced at the listening point, the listener can feel as if he or she were listening to the sound at the observation point.

Fig. 2 Spatial information of a wave

Spatial information of a wave can be expressed by a vector for each frequency bin.

The orientation and size of each arrow represent the propagation direction and speed of each frequency bin, respectively.

In our technology, a 4-track audio signal captured by the tetrahedral microphone system is converted to a 2-channel audio data stream without losing the 3-dimensional spatial properties of the sounds.

Please put on stereo headphones, which are necessary for this demonstration, and open the demonstration by clicking the thumbnail below. Once an image appears on the screen with "Click to start" or "Tap to start," you can start playback by clicking or tapping the image. You can pause and resume playback by clicking (tapping) the image. Under some network conditions, the screen may keep showing 'Loading data. Wait for a moment.' In such cases, clicking on the image may help.

During playback, you can change the panning of the full spherical image by moving the mouse pointer left or right on the image, and you will notice that the panning of the sound changes as well. You can also control the tilt (elevation from the horizontal plane) by moving the mouse pointer up or down.

Demo with a file "orimoto0.wav"

(Pan and tilt are available)

Playback of full spherical images on this site is implemented with Three.js.

In the clip below, you can control the roll (rotation around the front-to-rear axis) by placing the mouse pointer at the corners of the image; tilt is not available in this clip. Roll control on mobile devices is not guaranteed.
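
A minimal sketch of how yaw (pan), pitch (tilt), and roll could act on the per-bin vectors, assuming a right-handed frame with x pointing to the front, y to the left, and z up (this page does not specify its axes). Rotating every vector of a frame by the same matrix turns the whole sound field while leaving each vector's length, and hence the per-bin speed, unchanged. To follow a listener who turns by these angles, the inverse (transposed) rotation would be applied instead. The function names are hypothetical.

```python
import math


def rotation_matrix(yaw, pitch, roll):
    """R = Rz(yaw) @ Ry(pitch) @ Rx(roll), angles in radians.

    Assumed axes: x = front, y = left, z = up (right-handed).
    """
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def rotate(R, v):
    """Matrix-vector product R v for a 3-vector."""
    return tuple(sum(R[i][j] * v[j] for j in range(3)) for i in range(3))


def rotate_field(vectors, yaw=0.0, pitch=0.0, roll=0.0):
    """Apply one rotation to every per-bin vector of a frame."""
    R = rotation_matrix(yaw, pitch, roll)
    return [rotate(R, v) for v in vectors]


# Usage: a 90-degree yaw turns a front-pointing vector to the left (+y).
print(rotate_field([(1.0, 0.0, 0.0)], yaw=math.pi / 2))
```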

Demo with a file "fss00.wav"

(Pan and roll are available)

The sound files used in the clips above are "orimoto0.wav" and "fss00.wav." As the file extension indicates, they are WAV files, and they contain only 2 channels (stereo). If only one-dimensional spatial information were conveyed in a stereo WAV file, none of the yaw (pan), pitch (tilt), and roll of the sound could be changed.

Although a stereo WAV file has only 2 channels, with the Full-Spherical Stereo technology it can contain the full spherical (3-dimensional) spatial information of sounds.

Some other clips are available.

Demo with a file "lost_species.wav"

Demo with a file "orimoto1.wav"

Demo with a file "haunted_sniper.wav"

Features

Besides the interactive control of yaw, pitch and roll, this technology offers several unique features as follows.

[Panoramic beamformer]

Since the full spherical spatial information of sounds is contained in the stereo audio data stream, a directional beam can be formed programmatically during playback by using this spatial information.

In the next demo clip, 6 people surrounding the microphone system read 6 different texts at the same time, as shown in Fig. 3.

Fig. 3 Six readers and the microphone system

Three males and 3 females are reading 6 different texts in an anechoic room.

There is a tetrahedral microphone system in the center of the room.

In this demonstration, you can switch between beam patterns with the 'BEAMFORMER' button at the bottom of the demo screen. There are 4 beam patterns: 'OMNIDIRECTIONAL,' 'CARDIOID,' 'NARROW,' and 'SUPER NARROW.' Since the direction of the beam is linked to the panning direction, you can steer the beam in any direction you want.
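
This page does not document how the beam patterns are computed. One way to form a beam from per-bin spatial vectors, sketched here as an assumption, is time-frequency masking: each frequency bin is weighted according to the angle between the direction its wave came from and the steering (panning) direction, with the four pattern names mapped to cardioid-like curves of increasing sharpness. The exponents for 'NARROW' and 'SUPER NARROW', the axis convention, and all function names are hypothetical placeholders, not the demo's actual values.

```python
import math

# Hypothetical mapping from the demo's pattern names to powers of a cardioid;
# the actual beam shapes used in the demo are not documented on this page.
BEAM_EXPONENTS = {
    "OMNIDIRECTIONAL": 0,
    "CARDIOID": 1,
    "NARROW": 4,
    "SUPER NARROW": 16,
}


def unit(v):
    """Scale a 3-vector to unit length."""
    n = math.sqrt(sum(x * x for x in v))
    return tuple(x / n for x in v)


def beam_gain(propagation, look, pattern):
    """Gain for one frequency bin.

    propagation: the bin's propagation vector (points away from the source).
    look: steering direction of the beam (points toward the wanted source).
    """
    towards_source = tuple(-x for x in unit(propagation))
    cos_theta = sum(a * b for a, b in zip(towards_source, unit(look)))
    cardioid = max(0.0, 0.5 * (1.0 + cos_theta))  # 1 on axis, 0 directly behind
    return cardioid ** BEAM_EXPONENTS[pattern]


def apply_beam(bins, vectors, look, pattern):
    """Weight the complex spectrum of one frame bin by bin (time-frequency masking)."""
    return [x * beam_gain(v, look, pattern) for x, v in zip(bins, vectors)]


# Usage, with assumed axes x = front, y = left, z = up:
look = (1.0, 0.0, 0.0)          # beam steered to the front
from_front = (-1.0, 0.0, 0.0)   # propagating toward -x, i.e. arriving from the front
from_side = (0.0, -1.0, 0.0)    # propagating toward -y, i.e. arriving from the left
for name in BEAM_EXPONENTS:
    print(name, beam_gain(from_front, look, name), beam_gain(from_side, look, name))
```

Raising the cardioid to higher powers narrows the main lobe: with these placeholder exponents, a bin arriving from 90 degrees off-axis keeps a gain of 0.5 for 'CARDIOID' but only 0.0625 for 'NARROW'.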

Open the clip by clicking the thumbnail below.

Demo with a file "recit6.wav"

(Thanks to Tokyo City University students)

As you may notice, a directional beam can be formed from a stereo audio data stream and steered in any desired direction. We call this feature the 'Panoramic beamformer.'

[Underwater panoramic sound]

As described above, full spherical spatial sound can be captured by a 4-point recording. If the microphones used in the recording are replaced by hydrophones, panoramic sound can be recorded in water as well.

Since sound travels much faster in water than in air, the sound speed has to be adjusted in the processing program. Other than that, panoramic sound in water can be recorded and reproduced by almost the same procedure as in air. Figure 4 shows the tetrahedral hydrophone system used in our study.
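
A back-of-the-envelope comparison shows the scale involved, assuming nominal sound speeds of 343 m/s in air and 1480 m/s in fresh water (real values depend on temperature, salinity, and depth). It computes the largest arrival-time difference across one edge of each array: although the hydrophone tetrahedron in Fig. 4 is more than three times larger than the microphone tetrahedron in Fig. 1, its largest delay is shorter, because sound travels roughly 4.3 times faster in water. The function name is hypothetical.

```python
# Assumed nominal speeds of sound (m/s); real values vary with temperature, salinity and depth.
C_AIR = 343.0
C_WATER = 1480.0


def max_delay(edge_m, c):
    """Largest arrival-time difference (s) between two sensors one edge apart."""
    return edge_m / c


air = max_delay(0.015, C_AIR)       # 15 mm microphone tetrahedron (Fig. 1)
water = max_delay(0.050, C_WATER)   # 50 mm hydrophone tetrahedron (Fig. 4)
print(f"air: {air * 1e6:.1f} us, water: {water * 1e6:.1f} us")  # air: 43.7 us, water: 33.8 us
print(f"speed ratio water/air: {C_WATER / C_AIR:.2f}")          # speed ratio water/air: 4.31
```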

Fig. 4 Tetrahedral hydrophone system

The underwater panoramic sound recording system consists of 4 omnidirectional hydrophones (AQUARIAN AUDIO H2a-XLR). These hydrophones are placed at the 4 vertices of a tetrahedron.

Each side of the tetrahedron is 50 mm long.

The sounds in the following demo clips were recorded in water using the tetrahedral hydrophone system. In these clips, too, the full spherical spatial sound is reconstructed from the data stream stored in stereo WAV files.

Demo with a file "turtle01.wav"

Demo with a file "squal.wav"

[Panoramic sound morphing]

When tetrahedral microphone systems are placed at 2 or more points in the scene, the spatial properties of sounds not only at these points but also at points around them can be approximated by a method that we call 'Panoramic sound morphing.'

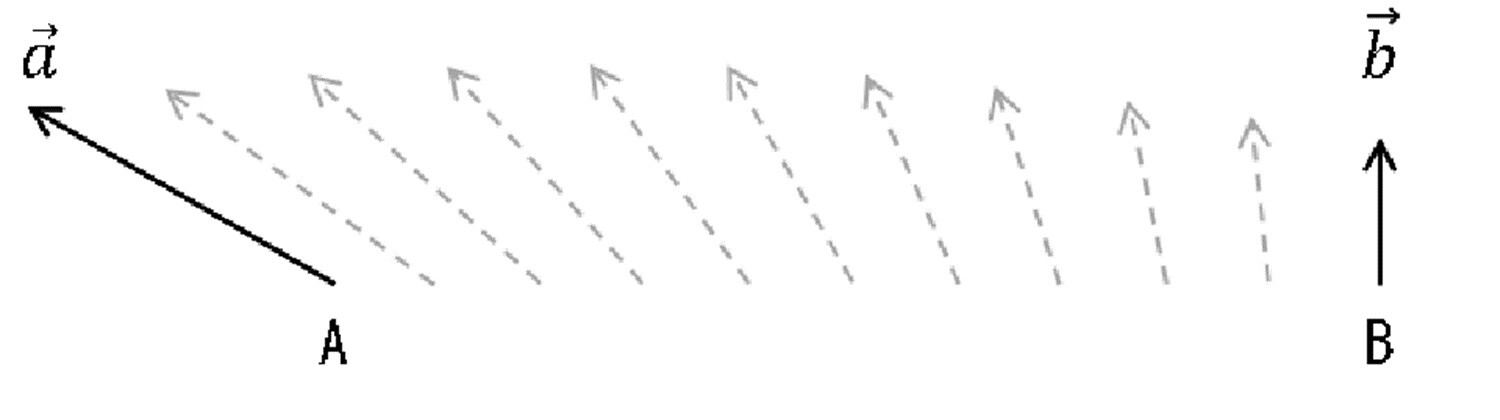

As mentioned previously, the spatial properties of a wave at the observation point can be expressed by a vector for each frequency bin. If the spatial properties of sounds at point A and point B are known, for example, the spatial sound at any point between them can be estimated by simple interpolation of the vectors for each frequency bin, as shown in Fig. 5. With this technology, listeners can seamlessly control not only the panning but also the listening point in the scene. This is the morphing of spatial sound.

Fig. 5 Estimation of spatial information

Spatial properties of a wave at any point between the measuring points A and B can be estimated by interpolation of vectors.

Every wave source has to be far enough away from the sensor array; in other words, the distance between the measuring points has to be small compared with the distance of the wave sources from the sensor array.
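
A minimal sketch of the per-bin interpolation, assuming that both arrays deliver time-aligned frames with the same frequency bins and that the listening point lies on the segment between A and B at fraction t. Plain linear interpolation is used as the "simple interpolation" mentioned above; note that it shortens the result when the two vectors point in different directions, so an implementation might instead interpolate direction and length separately. The function names are hypothetical.

```python
def lerp(a, b, t):
    """Linear interpolation between two 3-vectors."""
    return tuple((1.0 - t) * x + t * y for x, y in zip(a, b))


def morph(vectors_a, vectors_b, t):
    """Estimate per-bin vectors at the point (1 - t) * A + t * B.

    t = 0 reproduces point A and t = 1 reproduces point B. Meaningful only under the
    far-field condition of Fig. 5 (sources far away compared with the distance AB).
    """
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie between 0 (point A) and 1 (point B)")
    if len(vectors_a) != len(vectors_b):
        raise ValueError("both points need the same set of frequency bins")
    return [lerp(a, b, t) for a, b in zip(vectors_a, vectors_b)]


# Usage: two bins, listener halfway between A and B.
a = [(1.0, 0.0, 0.0), (0.0, 0.0, 2.0)]
b = [(0.0, 1.0, 0.0), (0.0, 0.0, 4.0)]
print(morph(a, b, 0.5))  # [(0.5, 0.5, 0.0), (0.0, 0.0, 3.0)]
```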

The sound in the next demo clip was recorded by 2 tetrahedral microphone systems while 6 people read 6 different texts in an anechoic room (Fig. 6). Since there were 2 tetrahedral microphone systems, 8 tracks were recorded in total.

Fig. 6 Six readers and the microphone systems

Three males and 3 females are reading 6 different texts in an anechoic room.

Sound was recorded by 2 tetrahedral microphone systems.

Open the demo footage by clicking the thumbnail below. Operation on mobile devices is not guaranteed.

You will find 2 controllers on the left and right sides of the 'Beamformer' button. The one on the left is the Motion controller, and the one on the right is the Pan controller. With the Motion controller, the listener can move his or her listening point.

Recitation by 6 individuals (Link opens itch.io page)

Demo with 2 stereo WAV files

(Thanks to Tokyo City University students)

Also try the following footage.

Canon by Johann Pachelbel (Link opens itch.io page)

Demo with 2 stereo WAV files

Please note that only 2 audio files are used in each of the clips above, yet the listener can move around the measuring points in the scene.

[Backward compatibility]

To reproduce the full spherical spatial sound, a custom app or program is needed. On this site, for instance, a JavaScript program is used to play back spatial sound. Since stereo WAV files in the Full-Spherical Stereo format are backward compatible with the conventional WAV format, the audio data stream can be played as ordinary one-dimensional stereo sound even if the custom app or program is not available.
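
Because the data stream is an ordinary stereo WAV file, any standard WAV reader can open it. A minimal sketch with Python's built-in wave module, assuming the files use integer PCM samples (the wave module cannot read floating-point WAV files). The in-memory test file merely stands in for a downloaded file such as "fss00.wav", and the helper names are hypothetical.

```python
import io
import wave


def describe_wav(source):
    """Return (channels, sample_rate, frames) for a PCM WAV file path or file object."""
    with wave.open(source, "rb") as w:
        return w.getnchannels(), w.getframerate(), w.getnframes()


def make_test_stereo_wav(frames=100, rate=48000):
    """Write a tiny silent 16-bit stereo WAV into memory (stand-in for a real file)."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as w:
        w.setnchannels(2)                # left and right
        w.setsampwidth(2)                # 16-bit samples
        w.setframerate(rate)
        w.writeframes(bytes(frames * 2 * 2))  # frames x channels x bytes per sample
    buf.seek(0)                          # wave leaves caller-supplied file objects open
    return buf


print(describe_wav(make_test_stereo_wav()))  # (2, 48000, 100)
# describe_wav("fss00.wav") would work the same way on a downloaded PCM file.
```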